Tinder Taps Its Inner Vegas to Predict Swipe Rights

IN THIS POST-TINDER world, your profile picture is everything. The world swipes right (acceptance!) or swipes left (rejection!) based solely on what your photo looks like. Not what you look like. What your photo looks like. So, when hunting for dates and other forms of conjugation, you better get that photo right. Your future could hinge on whether you choose the pic where you’re hugging the labradoodle or the one where you’re hiking through the woods.

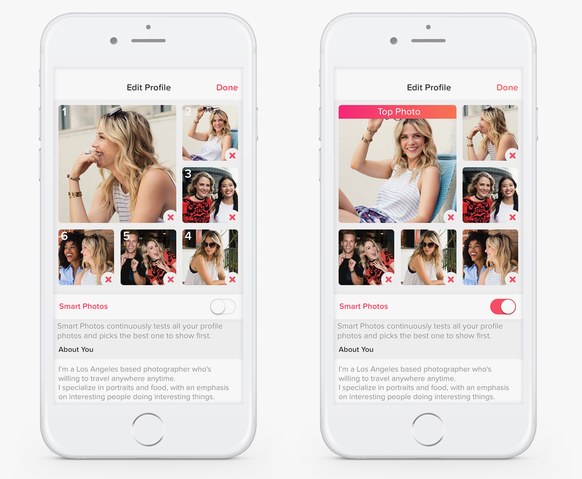

But now, you needn’t sweat this issue quite so much. Today, Tinder introduced a tool it calls Smart Photos. You upload five or six photos into the popular dating app, and through a combination of machine learning and what’s called multi-armed bandit testing, it decides which photos will likely appeal to which people. And it will automatically serve up what it thinks is the right one. In preliminary user testing, the company says, the tool led to a 12 percent uptick in matches. “Users have a lot of photos to express themselves but each one resonates with the person looking at it in a different way,” says Tinder CEO Sean Rad. “Even though you think the photo you pick as the primary photo is your best one, a lot of the time it isn’t.”

The tool is part of an ever-growing movement towards a form of machine learning called deep neural networks—systems that can learn tasks by analyzing vast amounts of data. This technique helps recognize faces and objects in photos posted to Facebook. It helps identify commands spoken into smartphones. And it’s beginning to reinvent machine translation and natural language understanding—not to mention online hookups. But Tinder is also playing into another enormous online trend: A/B testing and other similar ways of testing Internet content on the Internet itself, with real live users. The result is a far more precise way of getting a swipe right.

Smart Photos was the brainchild of Tinder machine learning lead Mike Hall, who proposed the tool at a company hackathon and assembled a team to build a prototype. Tinder’s in-house research team, including a resident sociologist named Jess Carbino, had already drawn up some general rules for the types of photos that don’t work that well—pics where part of your face is covered or you’re not smiling or you’re wearing a hat—but that didn’t account for the subtleties of personal taste. With machine learning, Tinder can reduce the chance that your labradoodle photo turns up on the phone of someone with dog allergies. And with multi-armed bandit testing, it can show most people the profile photo that most people find appealing.
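For readers curious what multi-armed bandit testing looks like in practice, here is a minimal sketch in Python. Tinder has not said which bandit algorithm it uses, so this assumes one of the simplest, known as epsilon-greedy: most of the time it shows the photo with the best right-swipe rate so far, and a small fraction of the time it tries a random photo to keep learning. The function names, the 10 percent exploration rate, and the swipe rates in the simulation are all hypothetical, chosen only for illustration.

```python
import random


def choose_photo(shows, right_swipes, epsilon=0.1, rng=random):
    """Return the index of the photo to show next (epsilon-greedy, an assumed strategy)."""
    # Explore: with probability epsilon, show a random photo.
    if rng.random() < epsilon:
        return rng.randrange(len(shows))
    # Exploit: show the photo with the best observed right-swipe rate.
    # Photos never shown get an optimistic rate of 1.0 so each is tried at least once.
    rates = [r / s if s else 1.0 for s, r in zip(shows, right_swipes)]
    return max(range(len(rates)), key=rates.__getitem__)


def simulate(true_rates, rounds=20000, epsilon=0.1, seed=0):
    """Simulate viewers swiping on photos whose true right-swipe rates are made up."""
    rng = random.Random(seed)
    shows = [0] * len(true_rates)
    right_swipes = [0] * len(true_rates)
    for _ in range(rounds):
        i = choose_photo(shows, right_swipes, epsilon, rng)
        shows[i] += 1
        if rng.random() < true_rates[i]:
            right_swipes[i] += 1
    return shows, right_swipes


if __name__ == "__main__":
    # Hypothetical rates: hat photo, hiking photo, labradoodle photo.
    shows, right_swipes = simulate([0.05, 0.10, 0.30])
    print("times shown:", shows)
    print("right swipes:", right_swipes)
```

Unlike a classic A/B test, which splits traffic evenly until the experiment ends, a bandit shifts traffic toward the winning photo while the test is still running, so fewer viewers see the weaker pictures. Note that this sketch only finds the photo most people prefer; matching individual photos to individual tastes would need the kind of personalization the article attributes to machine learning.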

Deep neural networks can recognize images in photos, and Tinder says it is also using a machine learning system that recognizes patterns in user behavior, both for individuals and groups of like-minded people. “Given the scale of Tinder’s user base and the high level of engagement from Tinder users,” the company says, “we can start optimizing and personalizing their Tinder experience quickly.” It says that people swipe about 1.4 billion times a day.

READ MORE: https://www.wired.com/2016/10/tinder-taps-inner-vegas-guess-people-will-swipe-right/